
I am a PhD student in Computing and Mathematical Sciences at Caltech, advised by Pietro Perona and funded by a Kortschak scholarship. My research interests lie at the intersection of machine learning and computer vision, with humanitarian and ecological applications. I am particularly interested in developing new techniques and methods that empower non-profits and NGOs and further their efforts.

Previously, I was a research engineer at Element AI, where I helped NGOs tackle human rights violations with deep learning (pro bono). There, I worked with Amnesty International on quantifying the scale and intersectionality of verbal abuse against women, and on detecting human presence in Darfur to document the Darfur genocide. I also helped Hope Not Hate analyze the social media activity of fascist groups during the latest European election. On the research side, I collaborated closely with Thomas Boquet to study reproducibility in machine learning.

I received a Master of Data Science from Illinois Institute of Technology, where I was advised by Shlomo Argamon. I also earned an EECS MSc and a Bachelor's degree at Ecole Superieure d'Informatique, Electronique, Automatique in Paris, where I was advised by Robert Erra and Vincent Guyot.

Email / CV / Biography / Google Scholar / LinkedIn

I'm interested in computer vision, machine learning, learning in the scarce labeled data regime, and AI for Good.

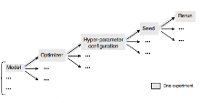

Thomas Boquet*, Laure Delisle*, Denis Kochetkov, Nathan Shucher, Parmida Atighehchian, Boris Oreshkin, Julien Cornebise. Under review, 2019. ✔ Experimental design methodology, based on linear mixed models, to study and separate the effects of multiple factors of variation in ML experiments.

Thomas Boquet*, Laure Delisle*, Denis Kochetkov*, Nathan Shucher*, Boris Oreshkin, Julien Cornebise. ICLR 2019, Workshop on Reproducibility in Machine Learning. ✔ Methodology for testing for statistically significant differences in model performance across multiple replications (MAML, Prototypical Networks, TADAM).

Laure Delisle*, Alfredo Kalaitzis*, Krzysztof Majewski, Archy de Berker, Milena Marin, Julien Cornebise. NeurIPS 2018, Workshop on AI for Social Good. ✔ First large-scale study of online abuse against women, in partnership with Amnesty International.